I apologize for the time it took before writing this part, but you know what they say: Better late than never.

In this part, I’ll explain how I extracted (scraped) the data from the Transports Quebec database mentioned in Part 1 using Python, Scrapy and a few other tools.

This post is not a full-fledged tutorial on using Scrapy, but it should give you a place to start if you’d like to do something similar.

In order to jump directly to the good stuff, I’ll skip the Scrapy installation and assume you are already familiar with Python. If you are not familiar with Python, you’re missing a lot. Get started here, then use some Google-Fu and start hacking on your own project to keep learning.

DISCLAIMER:

- This small project was a quick and dirty drive-by experiment. This code is not: a complete solution, the best solution, production-ready, [insert your own similar statement here]. I’m sure you can do better 🙂 (Feel free to post your comments and improvements; however, keep in mind that I don’t plan to maintain or improve this code.)

- This code follows my late-night style guide.

- For non-French-speaking readers: This project extracts data from a French-language (Quebec, Canada) website, but you can keep reading: the actual content is not really important, and you should be able to follow without problems. You may also learn some French words. 😉

- For French-speaking readers: If you are reading this, you probably won’t mind the English comments in my code. Unless required to do otherwise, I always write my code/comments in English. It’s easier to share this way.

- I’m sure you’ll find typos.

- Everything below was done using Python 2.7 and Scrapy v0.13. I have not tested with Scrapy 0.14+ or Python 3+. (UPDATE 2011/12/12: As Pablo Hoffman (none other than the lead Scrapy developer) noted in the comments, Scrapy does not support Python 3 and there are currently no plans to support it. Sorry about that!)

That said, let’s get started.

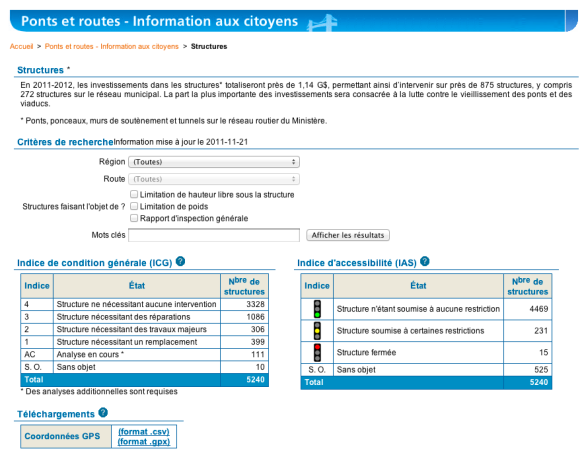

Our target: The Transports Quebec infrastructure database (Well, they call it “Ponts et routes: Information aux citoyens”.)

If you visit the website, you’ll notice that the first page you get is an empty form like this one:

So, to get to the actual data, you need to submit the form by clicking on “Afficher les résultats“. Luckily, the default form values will display all entries. After submitting the form, you’ll get results like these:

This is what our main scraper will have to deal with. Unfortunately, not all the fields we want to extract are available in this table. The rest is in what I call the “details” page. You get there by clicking on the “Fiche No” (first column) link (e.g. 00002). The details page looks like this:

So for each entry found, our scraper will have to follow the “Fiche No” link and scrape the details page too.

In addition, each page of the report only contains 15 items. This means we’ll have to navigate the pages too.

Tools You’ll Need

In order to identify HTML elements to extract, you’ll need these:

- Firefox

- Firebug (Web development tools, including HTML inspector)

- Firefinder (an XPath evaluator for Firebug)

NOTE: I used these tools. You may have your own tools that you prefer. Feel free to let me know of other good ones in the comments. (No need to point out that Safari has a web inspector, I know, but I did not find anything equivalent to Firefinder.)

Getting Started

Switch to a work directory then create your base Scrapy project (I called mine mtqinfra):

```
$ scrapy startproject mtqinfra
$ find .
.
./mtqinfra
./mtqinfra/__init__.py
./mtqinfra/items.py
./mtqinfra/pipelines.py
./mtqinfra/settings.py
./mtqinfra/spiders
./mtqinfra/spiders/__init__.py
./scrapy.cfg
```

At this point we have a “skeleton” project. Now let’s create a very simple spider just to see if we can get this to work. (NOTE: There’s a “scrapy genspider” command but I won’t use it here.) Create the file spiders/mtqinfra_spider.py:

```python
#!/usr/bin/env python
# encoding=utf-8

from scrapy.spider import BaseSpider
from scrapy.http import Request
from scrapy.http import FormRequest
from scrapy.selector import HtmlXPathSelector
from scrapy import log

import sys

### Kludge to set default encoding to utf-8
### (Python 2 only: reload() is not a builtin and sys.setdefaultencoding() does not exist in Python 3)
reload(sys)
sys.setdefaultencoding('utf-8')

class MTQInfraSpider(BaseSpider):
    name = "mtqinfra"
    allowed_domains = ["www.mtq.gouv.qc.ca"]
    start_urls = [
        "http://www.mtq.gouv.qc.ca/pls/apex/f?p=102:56:::NO:RP::"
    ]

    def parse(self, response):
        # Placeholder: for now we only check that the start URL can be fetched
        pass
```

Now, let’s see if this appears to work:

```
$ scrapy crawl mtqinfra
2011-11-22 00:52:09-0500 [scrapy] INFO: Scrapy 0.13.0 started (bot: mtqinfra)
[... more here ...]
2011-11-22 00:52:10-0500 [mtqinfra] DEBUG: Redirecting (302) to <GET http://www.mtq.gouv.qc.ca/pls/apex/f?p=102:56:2747914247598050::NO:RP::> from <POST http://www.mtq.gouv.qc.ca/pls/apex/wwv_flow.accept>
2011-11-22 00:52:10-0500 [mtqinfra] DEBUG: Crawled (200) <GET http://www.mtq.gouv.qc.ca/pls/apex/f?p=102:56:2747914247598050::NO:RP::> (referer: None)
2011-11-22 00:52:10-0500 [mtqinfra] INFO: Closing spider (finished)
[... more here ...]
```

As you can see, the bot requested our page, got redirected (status 302) because the initial URL did not include a session ID (2747914247598050), and finally downloaded the page (status 200).
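The session ID lives inside the `p` query parameter, whose values are separated by colons. Below is a minimal sketch, in Python 3 (unlike the Python 2.7 code in the rest of this post), of pulling those slots apart. It assumes the usual Oracle APEX `f?p=App:Page:Session:Request:Debug:ClearCache:itemNames:itemValues` layout, so check the APEX documentation for your version; `parse_apex_p` and `APEX_SLOTS` are hypothetical names, not part of Scrapy or of my scraper.

```python
from urllib.parse import urlsplit, parse_qs

# Names of the colon-separated slots of the APEX "p" parameter, in order (assumed layout).
APEX_SLOTS = ["app", "page", "session", "request", "debug",
              "clear_cache", "item_names", "item_values"]

def parse_apex_p(url):
    """Split the 'p' parameter of an APEX URL (absolute or relative) into named slots."""
    query = urlsplit(url).query
    p_value = parse_qs(query, keep_blank_values=True)["p"][0]
    parts = p_value.split(":")
    # zip() stops at the shorter sequence, so missing trailing slots are simply absent
    return dict(zip(APEX_SLOTS, parts))

info = parse_apex_p("http://www.mtq.gouv.qc.ca/pls/apex/f?p=102:56:2747914247598050::NO:RP::")
print(info["session"])  # 2747914247598050
```

The same function works on the relative details links that appear later (e.g. `f?p=102:53:482485043431341::NO:53:P53_IDE_STRCT_0001:211033`), where the last slot holds the structure ID.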

Posting the Form

OK, the next step is to get our bot to submit the form for us. Let’s tweak the parse method a bit and add another method to handle the real parsing like this:

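```python
    def parse(self, response):
        # Submit the first form found on the page, using its default values
        return [FormRequest.from_response(response,
                                          callback=self.parse_main_list)]

    def parse_main_list(self, response):
        self.log("After submitting form.", level=log.INFO)
        # Dump the response to a file so we can inspect it in a browser
        with open("results.html", "w") as f:
            f.write(response.body)
        import os
        os.system("open results.html")  # "open" is macOS-specific; most Linux desktops use xdg-open
```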

Now, the parse method returns a “FormRequest” object that will instruct the bot to submit the form, then call “self.parse_main_list” with the response.

$ scrapy crawl mtqinfra

Oops. The response contains no results. What’s wrong? Well, if you dig a bit (I sniffed the network traffic to compare what Firefox sends when the form button is pressed with what Scrapy sends), you’ll find that Scrapy does not set the “p_request” form field to “RECHR” the way the browser does. The field is empty by default and is set by a JavaScript function, which Scrapy does not execute. Let’s fix that:

```python
    def parse(self, response):
        # p_request is normally filled in by JavaScript in the browser, so we set it ourselves
        return [FormRequest.from_response(response,
                                          formdata={ "p_request": "RECHR" },
                                          callback=self.parse_main_list)]

    def parse_main_list(self, response):
        self.log("After submitting form.", level=log.INFO)
        with open("results.html", "w") as f:
            f.write(response.body)
        import os
        os.system("open results.html")
```

Ahh, much better. Now we can begin our parser.
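To make it clearer what `formdata` does: conceptually, FormRequest.from_response() starts from the values already present in the page’s form and lets `formdata` override them. The sketch below imitates that idea with the Python 3 standard library. It is not Scrapy’s implementation, it only looks at `<input>` elements, and the toy HTML and its `p_flow_id` field are made up (only `p_request`/`RECHR` come from the real form).

```python
from html.parser import HTMLParser
from urllib.parse import urlencode

class FormFieldCollector(HTMLParser):
    """Collect name/value pairs of <input> elements (simplified: no selects, buttons or checkboxes)."""

    def __init__(self):
        super().__init__()
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        if tag == "input":
            attrs = dict(attrs)
            if "name" in attrs:
                # Valueless attributes come back as None; treat them as empty strings
                self.fields[attrs["name"]] = attrs.get("value") or ""

def build_form_body(html, overrides):
    """Start from the form's default values, then apply explicit overrides."""
    collector = FormFieldCollector()
    collector.feed(html)
    fields = dict(collector.fields)
    fields.update(overrides)
    return urlencode(fields)

page = ('<form><input type="hidden" name="p_flow_id" value="102">'
        '<input type="hidden" name="p_request" value=""></form>')
print(build_form_body(page, {"p_request": "RECHR"}))  # p_flow_id=102&p_request=RECHR
```

Without the override, the body would carry an empty `p_request`, which matches the empty results we got on the first attempt.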

Identifying HTML Elements and Their Corresponding XPath

Scrapy uses XPath to select and extract elements from a web page. Well, technically speaking you could parse the response body any way you want (e.g. using regular expressions), but XPath is very powerful so I suggest you give it a try.

I won’t write an XPath tutorial here, but simply put, XPath is a query language that allows you to select elements from HTML like you would do with SQL to extract fields from a table. Although XPath queries can appear intimidating at first, the XPath syntax itself is pretty simple.

Here are some tips to understand, learn and use XPath quickly and identify elements you want to extract.

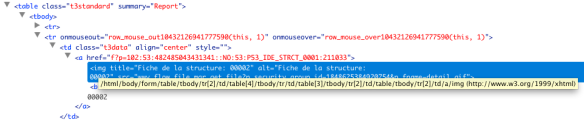

Use Firebug to identify absolute XPath expressions

In Firebug, the absolute XPath expression to select an HTML element is displayed in a tooltip:

You can also right-click an element and select “Copy XPath” to copy it to the clipboard:

Use Firefinder to test XPath expressions

In Firebug, select the Firefinder tab and enter your XPath expression (or query or filter or selector, whatever you want to call it), then click “Filter”. The matching element(s) will be listed below and highlighted on the page. Because we’ll need to loop over each row of the results table, try it with this expression:

//table[@id="R10432126941777590"]//table[@summary="Report"]/tbody/tr

This expression will select each row of the result table.

Use “scrapy shell” to test XPath expressions in Scrapy

Scrapy has a very handy “shell” mode to help you test stuff. In order to bypass the form submission process and go directly to the results page, submit the form with your browser and then copy the URL that includes your session ID. If you do a “GET” on this URL, you’ll get the page you were viewing in your browser (as long as the session ID is still valid). Let’s try it:

```
$ scrapy shell http://www.mtq.gouv.qc.ca/pls/apex/f?p=102:56:482485043431341::NO:RP::
[... MORE HERE ...]
>>> hxs.select('//table[@id="R10432126941777590"]//table[@summary="Report"]/tbody/tr[2]')
[]
>>> hxs.select('//table[@id="R10432126941777590"]//table[@summary="Report"]/tr[2]')
[<HtmlXPathSelector xpath='//table[@id="R10432126941777590"]//table[@summary="Report"]/tr[2]' data=u'<tr onmouseover="row_mouse_over104321269'>]
>>> row2 = hxs.select('//table[@id="R10432126941777590"]//table[@summary="Report"]/tr[2]')
>>> row2_cells = row2.select('td')
>>> len(row2_cells)
11
>>> row2_cells[0]
<HtmlXPathSelector xpath='td' data=u'<td class="t3data" align="center"><a hre'>
>>> row2_cells[0].extract()
u'
<a href="f?p=102:53:482485043431341::NO:53:P53_IDE_STRCT_0001:211033"><img title="Fiche de la structure: 00002" src="wwv_flow_file_mgr.get_file?p_security_group_id=1848625384920754&p_fname=detail.gif" alt="Fiche de la structure: 00002" />
00002</a>
'
>>> row2_cells[0].select('a/text()').extract()[0]
u'00002'
>>> row2_cells[0].select('a/@href').extract()[0]
u'f?p=102:53:482485043431341::NO:53:P53_IDE_STRCT_0001:211033'
```

IMPORTANT: Take note of the first query and its empty result ([]). While Firefinder takes the “tbody” tag into account in XPath expressions, Scrapy does not want it, so our previously working XPath returns nothing. The most likely explanation is that browsers insert “tbody” elements into tables when they build the DOM, and Firebug/Firefinder inspect that DOM, whereas Scrapy parses the raw HTML sent by the server, which here contains no “tbody”. If you remove the “tbody” step (second query), the expression works and returns the second row of the results table.

The “row2_cells = row2.select('td')” line shows the power of XPath and the Scrapy HtmlXPathSelector object. To get the list of cells for row #2, we simply call “select(‘td’)” on the HtmlXPathSelector for the row.

The remaining lines show how to use the extract() method to extract HTML, text and attribute values.
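You can reproduce the “tbody” surprise and the relative selections without a live session. This sketch uses Python 3’s `xml.etree.ElementTree` as a stand-in for Scrapy’s selectors; ElementTree only understands a subset of XPath and needs well-formed markup, and the toy table below is a made-up, simplified version of the results page.

```python
import xml.etree.ElementTree as ET

# Toy results table: like the HTML the server sends, the raw markup has no <tbody>
# (a browser would add one when building its DOM).
RAW = """
<div>
  <table id="R10432126941777590">
    <tr><td>
      <table summary="Report">
        <tr><th>Fiche No</th><th>Nom</th></tr>
        <tr><td><a href="f?p=102:53:1::NO:53:P53_IDE_STRCT_0001:211033">00002</a></td><td>Pont A</td></tr>
      </table>
    </td></tr>
  </table>
</div>
"""

root = ET.fromstring(RAW.strip())
report = ".//table[@id='R10432126941777590']//table[@summary='Report']"

# The browser-style path with tbody matches nothing in the raw markup...
print(len(root.findall(report + "/tbody/tr")))  # 0
# ...while the same path without tbody finds both rows (header + data)
rows = root.findall(report + "/tr")
print(len(rows))  # 2

# Relative selection from the second row, like row2.select('td') in the shell session
cells = rows[1].findall("td")
link = cells[0].find("a")
print(link.text, link.get("href"))
```

Real pages are rarely well-formed XML, which is one reason Scrapy relies on a proper HTML parser underneath; the XPath reasoning stays the same.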

Create XPath expressions that are general and specific at the same time

This may sound contradictory, but here’s what I mean:

Take this XPath (Firefinder format, remove tbody for Scrapy):

/html/body/form/table/tbody/tr[2]/td/table[4]/tbody/tr/td/table[3]/tbody/tr[2]/td/table

It is a very specific and absolute XPath to the results table. Should the web page change just a bit (e.g., an extra row in the first table of the form or a new table to hold new information), your XPath will become invalid or point to the wrong table. Now, in the page generated by the underlying reporting logic (Oracle Application Express (APEX) in this case), we noticed that the parent table of the results table has the “id” attribute set to “R10432126941777590” and that the actual results table has the attribute “summary” set to “Report”. We can then use the following XPath to get to the same table:

//table[@id="R10432126941777590"]//table[@summary="Report"]

It is more “general” because it skips over everything but two tables. It simply says: “Get me the tables that have their ‘summary’ attribute set to ‘Report’ and that are also ‘under’ (inside) tables that have their ‘id’ attribute set to ‘R10432126941777590’.” However, because the “id” is very specific (it matches only one table) and the “summary” is also (somewhat) specific (it matches only one table inside that other table), we are unlikely to match anything else. That’s what I mean by general and specific at the same time.
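Here is a small experiment showing the difference when a page gains an extra layout table. It uses Python 3’s `xml.etree.ElementTree` on a made-up page; `page()`, `ABSOLUTE` and `ANCHORED` are names invented for this sketch. The position-based path stops matching, while the id/summary path still finds the results table.

```python
import xml.etree.ElementTree as ET

def page(extra_layout_table):
    """Build a toy page; optionally insert an extra layout table before the results."""
    extra = "<table><tr><td>Avis</td></tr></table>" if extra_layout_table else ""
    return ET.fromstring(
        "<form>" + extra +
        "<table id='R10432126941777590'><tr><td>"
        "<table summary='Report'><tr><td>00002</td></tr></table>"
        "</td></tr></table>"
        "</form>"
    )

# Position-based path: "first table, its row, its cell, the table inside"
ABSOLUTE = "./table[1]/tr/td/table"
# Attribute-based path: general about structure, specific about identity
ANCHORED = ".//table[@id='R10432126941777590']//table[@summary='Report']"

for changed in (False, True):
    root = page(changed)
    print("extra table:", changed,
          "| absolute hits:", len(root.findall(ABSOLUTE)),
          "| anchored hits:", len(root.findall(ANCHORED)))
```

On the real page, the positional path could just as well land on the wrong table instead of matching nothing, which is harder to notice.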

Now, I don’t know Oracle APEX well enough to be certain that the “id” used above won’t change if the report’s HTML format is modified, so my solution could break in that case. The general principle still holds, though.

Use relative XPath expressions and HtmlXPathSelector objects

Don’t use absolute XPath expressions (as mentioned above) or repeat expressions in your code. Instead, use the powerful HtmlXPathSelector objects to navigate the HTML structure with relative XPath expressions. For example, this code gets you columns 1-3 of row 2 of the results table, but it sucks:

```python
hxs = HtmlXPathSelector(response)
row2_cell1 = hxs.select('/html/body/form/table/tr[2]/td/table[4]/tr/td/table[3]/tr[2]/td/table/tr[2]/td[1]')
row2_cell2 = hxs.select('/html/body/form/table/tr[2]/td/table[4]/tr/td/table[3]/tr[2]/td/table/tr[2]/td[2]')
row2_cell3 = hxs.select('/html/body/form/table/tr[2]/td/table[4]/tr/td/table[3]/tr[2]/td/table/tr[2]/td[3]')
```

This code does the same, but does not suck:

```python
hxs = HtmlXPathSelector(response)
rows = hxs.select('//table[@id="R10432126941777590"]//table[@summary="Report"]/tr')
row2 = rows[1]  # NOTE: rows is indexed like a Python list, indexing starts at 0
cells = row2.select('td')
row2_cell1 = cells[0]
row2_cell2 = cells[1]
row2_cell3 = cells[2]
```

Why? Because in the first case, if anything changes in the HTML, you’ll need to modify 3 XPath expressions. In the second case, you’ll probably need to modify only one (if necessary at all). Of course, this example is simplified a bit to show you the concept (you’ll probably want to loop over rows and cells in your code as we’ll do later), but I hope you get the idea. Unfortunately, sometimes there is no (safe) way to get to an element other than by using an (almost) absolute XPath. Just try to minimize their use in your project.

The MTQInfraItem Object

The parsers that handle responses as the website is crawled return “Item” objects (or Request objects to instruct the crawler to request more pages). “Item” objects are more or less a “model” of the data you will be scraping: containers for the structured data you extract from the HTML pages. Edit the items.py file and replace the existing “MtqinfraItem” with this one:

```python
from scrapy.item import Item, Field

class MTQInfraItem(Item):
    # From main table
    record_no = Field()
    record_href = Field()
    structure_id = Field()
    structure_name = Field()
    structure_type = Field()
    structure_type_img_href = Field()
    territorial_direction = Field()
    rcm = Field()
    municipality = Field()
    road = Field()
    obstacle = Field()
    gci = Field()
    ai_desc = Field()
    ai_img_href = Field()
    ai_code = Field()
    location_href = Field()
    planned_intervention = Field()

    # From details
    road_class = Field()
    latitude = Field()
    longitude = Field()
    construction_year = Field()
    picture_href = Field()
    last_general_inspection_date = Field()
    next_general_inspection_date = Field()
    average_daily_flow_of_vehicles = Field()
    percent_trucks = Field()
    num_lanes = Field()
    fusion_marker = Field()
```

As you can see, to create your own MTQInfraItem type, you simply subclass the Item class and add a bunch of fields that you later plan to populate and save in your output.
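One benefit of declaring fields: Scrapy’s Item objects behave like dicts but refuse keys that were not declared (assigning one raises a KeyError), so a typo in a field name fails loudly instead of silently creating a new column. The sketch below imitates that behavior in plain Python 3 to show the idea; `DeclaredItem`, `StructureItem` and the `Field` stand-in are hypothetical and are not Scrapy’s implementation.

```python
class Field(dict):
    """Stand-in for scrapy.item.Field: just a container for field metadata."""

class DeclaredItem(dict):
    """Dict that only accepts keys declared as Field class attributes (simplified imitation)."""

    def __init__(self, *args, **kwargs):
        super().__init__()
        # Route initial values through __setitem__ so they are validated too
        for key, value in dict(*args, **kwargs).items():
            self[key] = value

    @classmethod
    def declared_fields(cls):
        return {name for klass in cls.__mro__
                for name, value in vars(klass).items() if isinstance(value, Field)}

    def __setitem__(self, key, value):
        if key not in self.declared_fields():
            raise KeyError("%s does not support field: %s" % (type(self).__name__, key))
        super().__setitem__(key, value)

class StructureItem(DeclaredItem):
    record_no = Field()
    latitude = Field()
    longitude = Field()

item = StructureItem(record_no="00002")
item["latitude"] = 45.5
try:
    item["lattitude"] = 45.5  # typo: rejected instead of silently adding a new key
except KeyError as err:
    print("rejected:", err)
```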

Scraping the Main List

Scraping the main page requires us to do the following:

- Process each row of the results table and, for each one:

  - Extract all the data we want to keep

  - Create a new MTQInfraItem object for the data

  - Save the item in a buffer, because it is still incomplete: we need to scrape the “details” page to extract the rest of the fields

  - Return a “Request” object to the crawler to inform it that the “details” page needs to be requested

- Check if there is another page containing results and, if so, return a “Request” object to the crawler to inform it that another page of results needs to be requested.

The final parser for the main list looks like this:

```python
    # Assumes these module-level imports in mtqinfra_spider.py, in addition to the ones shown earlier:
    #   import re
    #   from mtqinfra.items import MTQInfraItem
    # and a dict self.items_buffer initialized on the spider (e.g. in __init__()).
    def parse_main_list(self, response):
        try:
            # Parse the main table
            hxs = HtmlXPathSelector(response)
            rows = hxs.select('//table[@id="R10432126941777590"]//table[@summary="Report"]/tr')
            if not rows:
                self.log("Failed to extract results table from response for URL '{:s}'. Has 'id' changed?".format(response.request.url), level=log.ERROR)
                return

            for row in rows:
                cells = row.select('td')

                # Skip header (header cells are <th>, so no <td> is found)
                if not cells:
                    continue

                # Check if this is the last row. It contains only one cell and we must dig in to get page info
                if len(cells) == 1:
                    total_num_records = int(hxs.select('//table[@id="R19176911384131822"]/tr[2]/td/table/tr[8]/td[2]/text()').extract()[0])
                    # NOTE: an XPath starting with '//' searches the whole document, even when called on a cell
                    first_record_on_page = int(cells[0].select('//span[@class="fielddata"]/text()').extract()[0].split('-')[0].strip())
                    last_record_on_page = int(cells[0].select('//span[@class="fielddata"]/text()').extract()[0].split('-')[1].strip())
                    self.log("Scraping details for records {:d} to {:d} of {:d} [{:.2f}% done].".format(first_record_on_page,
                        last_record_on_page, total_num_records, float(last_record_on_page)/float(total_num_records)*100), level=log.INFO)

                    # DEBUG: Switch check if you only want to process a certain number of records (e.g. 45)
                    #if last_record_on_page < 45:
                    if last_record_on_page < total_num_records:
                        page_links = cells[0].select('//a[@class="fielddata"]/@href').extract()
                        if len(page_links) == 1:
                            # On first page
                            next_page_href = page_links[0]
                        else:
                            next_page_href = page_links[1]

                        # Request to scrape next page
                        yield Request(url=response.request.url.split('?')[0]+'?'+next_page_href.split('?')[1], callback=self.parse_main_list)
                        continue
                    else:
                        # Nothing more to do
                        break

                # Cell 1: Record # + Record HREF
                record_no = cells[0].select('a/text()').extract()[0].strip()
                record_relative_href = cells[0].select('a/@href').extract()[0]
                record_href = response.request.url.split('?')[0]+'?'+record_relative_href.split('?')[1]
                structure_id = re.sub(ur"^.+:([0-9]+)$", ur'\1', record_href)

                # Cell 2: Name
                structure_name = "".join(cells[1].select('.//text()').extract()).strip()

                # Cell 3: Structure Type Image
                structure_type = cells[2].select('img/@alt').extract()[0]
                structure_type_img_relative_href = cells[2].select('img/@src').extract()[0]
                structure_type_img_href = re.sub(r'/[^/]*$', r'/', response.request.url) + structure_type_img_relative_href

                # Cell 4: Combined Territorial Direction + Municipality
                territorial_direction = "".join(cells[3].select('b//text()').extract()).strip()
                # NOTE: Municipality taken from details page as it was easier to parse.

                # Cell 5: Road
                road = "".join(cells[4].select('.//text()').extract()).strip()

                # Cell 6: Obstacle
                obstacle = "".join(cells[5].select('.//text()').extract()).strip()

                # Cell 7: GCI (General Condition Index)
                gci = cells[6].select('nobr/text()').extract()[0].strip()

                # Cell 8: AI (Accessibility Index)
                # Defaults to "no_restriction" as most records will have this code.
                ai_code = 'no_restriction'
                if cells[7].select('nobr/img/@alt'):
                    ai_desc = cells[7].select('nobr/img/@alt').extract()[0]
                    ai_img_relative_href = cells[7].select('nobr/img/@src').extract()[0]
                    ai_img_href = re.sub(r'/[^/]*$', r'/', response.request.url) + ai_img_relative_href
                else:
                    # If no image found for AI, then code = not available
                    ai_code = 'na'
                    if cells[7].select('nobr/text()'):
                        # Some text was available, use it
                        ai_desc = cells[7].select('nobr/text()').extract()[0]
                    else:
                        ai_desc = "N/D"
                    # Use our own gray traffic light hosted on CloudApp
                    ai_img_href = "http://cl.ly/2r2A060b1g0N0l3f1y3L/feugris.png"

                # Set ai_code according to description if applicable
                if re.search(ur'certaines', ai_desc, re.I):
                    ai_code = 'restricted'
                elif re.search(ur'fermée', ai_desc, re.I):
                    ai_code = 'closed'

                # Cell 9: Location HREF
                onclick = cells[8].select('a/@onclick').extract()[0]
                location_href = re.sub(ur"^javascript:pop_url\('(.+)'\);$", ur'\1', onclick)

                # Cell 10: Planned Intervention
                planned_intervention = "".join(cells[9].select('.//text()').extract()).strip()

                # Cell 11: Report (yes/no image only) (SKIP)

                item = MTQInfraItem()
                item['record_no'] = record_no
                item['record_href'] = record_href
                item['structure_id'] = structure_id
                item['structure_name'] = structure_name
                item['structure_type'] = structure_type
                item['structure_type_img_href'] = structure_type_img_href
                item['territorial_direction'] = territorial_direction
                item['road'] = road
                item['obstacle'] = obstacle
                item['gci'] = gci
                item['ai_desc'] = ai_desc
                item['ai_img_href'] = ai_img_href
                item['ai_code'] = ai_code
                item['location_href'] = location_href
                item['planned_intervention'] = planned_intervention

                # Keep the partial item until its details page has been parsed
                self.items_buffer[structure_id] = item

                # Request to scrape details
                yield Request(url=record_href, callback=self.parse_details)

        except Exception:
            # Something went wrong parsing this page. Log URL so we can determine which one.
            self.log("Parsing failed for URL '{:s}'".format(response.request.url), level=log.ERROR)
            raise  # Re-raise exception
```

More details on specific parts of the code:

The try/except block around the whole method: we wrap our code in it to log any parsing error with our own message (including the URL of the page that failed) before re-raising the exception.

The “if not cells: continue” check: this is where we skip the header. The logic works because the table header cells are “th” tags, not “td”, so cells is empty.

The “if len(cells) == 1:” block: this is where we check whether we’ve reached the last page. If not, we create the “Request” object for the next page.

The commented “#if last_record_on_page < 45:” line inside that block: we’ll refer to it in the “Testing It” section below.

The “# Cell 1” to “# Cell 11” sections: this is where we extract our data.

The “item = MTQInfraItem()” lines that follow: here, we create our MTQInfraItem and set the fields we just extracted.

“self.items_buffer[structure_id] = item”: here, we save our MTQInfraItem to our internal buffer so we can use it later when we parse the corresponding “details” page.

The final “yield Request(url=record_href, callback=self.parse_details)”: finally, we yield a “Request” object so the crawler will request the corresponding “details” page and call our “parse_details” method with the response.
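The record-URL code in parse_main_list() rebuilds absolute URLs by splitting on “?”. As an alternative, the standard library’s `urljoin` resolves a relative `f?p=...` link against the page URL directly. The Python 3 sketch below pairs it with the same regular expressions used above for the structure ID and the `pop_url` onclick handler; the helper names and the sample onclick value are made up for illustration.

```python
import re
from urllib.parse import urljoin

def absolute_record_url(page_url, relative_href):
    """Resolve a relative 'f?p=...' link against the URL of the page it was found on."""
    return urljoin(page_url, relative_href)

def structure_id_from_url(record_url):
    """The structure ID is the last colon-separated value of the details URL."""
    match = re.search(r":([0-9]+)$", record_url)
    return match.group(1) if match else None

def url_from_onclick(onclick):
    """Extract the URL passed to a javascript:pop_url('...') handler, or None."""
    match = re.match(r"^javascript:pop_url\('(.+)'\);$", onclick)
    return match.group(1) if match else None

page_url = "http://www.mtq.gouv.qc.ca/pls/apex/f?p=102:56:482485043431341::NO:RP::"
record_url = absolute_record_url(page_url, "f?p=102:53:482485043431341::NO:53:P53_IDE_STRCT_0001:211033")
print(record_url)
print(structure_id_from_url(record_url))  # 211033
```

`urljoin` also copes with absolute hrefs and `../` segments, which the split-based version would get wrong, at the cost of being slightly less obvious to read.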

Scraping the Details

I won’t post the code to scrape the “details” page here, as it is mostly similar to the “# Cell” sections of the previous parser. The only thing to note is that parse_details() returns the final MTQInfraItem object to the crawler so it can be sent down the pipeline.
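The details code isn’t shown, but the hand-off through the buffer can be sketched. Below is a minimal, hypothetical version in plain Python 3 (not my actual parse_details): the main-list side stores a partial item under its structure ID, and the details side pops it, merges the extra fields and returns the completed item. The field values are made up.

```python
def buffer_main_row(items_buffer, structure_id, main_fields):
    """Store the partial item scraped from the results table, keyed by structure ID."""
    items_buffer[structure_id] = dict(main_fields)

def complete_from_details(items_buffer, structure_id, detail_fields):
    """Merge details-page fields into the buffered item and return the finished item.

    pop() removes the entry, so the buffer does not hold every item until the crawl ends.
    Returns None if no partial item was buffered for this structure ID.
    """
    item = items_buffer.pop(structure_id, None)
    if item is None:
        return None
    item.update(detail_fields)
    return item

items_buffer = {}
buffer_main_row(items_buffer, "211033", {"record_no": "00002"})
completed = complete_from_details(items_buffer, "211033", {"latitude": 45.5, "longitude": -73.6})
print(completed)
print(items_buffer)  # {}
```

Scrapy’s Request also accepts a `meta` dict that the callback receives as `response.meta`, which is another way to carry the partial item along without a shared buffer.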

Testing It

Before you run this puppy for the first time, you should limit the crawling to a small number of records. I used 45 records because each page has 15, which gives us a reasonable sample to validate most of our code. This is where the commented “#if last_record_on_page < 45:” line in parse_main_list() comes in handy: simply uncomment it and comment out the “if last_record_on_page < total_num_records:” line right below it to stop processing after 45 records.
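If you’d rather not comment and uncomment lines, the page-range check can take an optional limit instead. A small Python 3 sketch: `parse_page_range`, `should_request_next_page`, `max_records` and the total of 9000 records are hypothetical, while the “first - last” text format and the 45-record test limit come from the parser above.

```python
def parse_page_range(range_text):
    """Parse pagination text such as '16 - 30' into (first, last) record numbers."""
    first, last = (int(part.strip()) for part in range_text.split("-"))
    return first, last

def should_request_next_page(last_record_on_page, total_num_records, max_records=None):
    """True if more records remain, optionally capped at max_records for test runs."""
    limit = total_num_records if max_records is None else min(max_records, total_num_records)
    return last_record_on_page < limit

first, last = parse_page_range("16 - 30")
total = 9000
print("records %d to %d of %d [%.2f%% done]" % (first, last, total, last / total * 100))
print(should_request_next_page(last, total))                # True
print(should_request_next_page(45, total, max_records=45))  # False
```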

If you try to run the crawler as-is on the Transports Quebec website, you’ll probably get errors. At least I did. Apparently, the website does not process concurrent requests using the same session ID. You get an error page when you attempt to do so. By default, Scrapy will attempt to crawl websites more quickly by executing requests concurrently. To disable this completely, add the following lines to settings.py:

```python
CONCURRENT_REQUESTS_PER_DOMAIN = 1
CONCURRENT_SPIDERS = 1
```

REMEMBER: Be polite. Try to minimize the impact of your scraping on the web server. Do your testing on a small number of pages until you are satisfied with your output. Don’t scrape thousands of pages, add a new field and then scrape thousands of pages again. This is particularly true if you test this project. Don’t hammer the Transports Quebec website just for fun, they will simply raise my taxes to buy a bigger server 🙂

By default, if you simply run “scrapy crawl mtqinfra”, Scrapy will print each item on stdout. If you want to save the output in a usable format, you can use the “-o output_file” and “--output-format=format” options, e.g.:

scrapy crawl mtqinfra --output-format=csv -o output.csv

NOTE: If you attempt to save to XML at this point, you’ll only get a bunch of exceptions because the default XML exporter only handles string fields and our items have floats. Read on for the solution.

Generating Output Files in Different Formats

OK, you tested the crawler and you are satisfied but you want to save the output in different formats, in a format of your own or in a database. This is where pipelines and exporters come into play.

A pipeline is simply a Python object with a “process_item” method. Once it is added to our settings.py file, the pipeline object will be instantiated by the crawler and its “process_item” method will be called for each MTQInfraItem. You can then save the item, change it, or discard it (by raising a DropItem exception) so other pipelines won’t process it.

Exporters are objects with predefined methods that can be used to persist data in a specific format. Scrapy comes with predefined exporters for CSV, JSON, LineJSON, XML, Pickle (Python) and Pretty Print. You can easily subclass these to modify some of their behavior, or subclass the BaseItemExporter class to create your own exporter. In our case, we’ll do both.
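To illustrate the exporter lifecycle (start_exporting once, export_item for each item, finish_exporting once), here is a simplified JSON-lines exporter in plain Python 3. It is a sketch, not Scrapy’s JsonLinesItemExporter: it writes to any file-like object, honors a `fields_to_export` order, and (a choice made for this sketch) skips fields an item does not have.

```python
import io
import json

class JsonLinesSketchExporter:
    """Simplified exporter with the same three-method lifecycle as Scrapy's exporters."""

    def __init__(self, file, fields_to_export=None):
        self.file = file
        self.fields_to_export = fields_to_export

    def start_exporting(self):
        pass  # nothing to write up front for JSON lines (an XML exporter would open its root element)

    def export_item(self, item):
        names = self.fields_to_export or list(item)
        # Keep the configured order; skip fields this item doesn't have
        row = {name: item[name] for name in names if name in item}
        self.file.write(json.dumps(row) + "\n")

    def finish_exporting(self):
        self.file.flush()

out = io.StringIO()
exporter = JsonLinesSketchExporter(out, fields_to_export=["latitude", "longitude", "record_no"])
exporter.start_exporting()
exporter.export_item({"record_no": "00002", "longitude": -73.6, "latitude": 45.5,
                      "fusion_marker": "small_red"})
exporter.finish_exporting()
print(out.getvalue())
```

Note how `fields_to_export` both orders the output and leaves out `fusion_marker`, which is the same trick the pipeline in this post uses to keep that field out of every output but KML.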

Here’s what our exporters.py file looks like:

```python
#!/usr/bin/env python
# encoding=utf-8

from scrapy.contrib.exporter import CsvItemExporter
from scrapy.contrib.exporter import JsonItemExporter
from scrapy.contrib.exporter import JsonLinesItemExporter
from scrapy.contrib.exporter import XmlItemExporter
from scrapy.contrib.exporter import BaseItemExporter

import json
import simplekml

class MTQInfraXmlItemExporter(XmlItemExporter):

    def serialize_field(self, field, name, value):
        # Base XML exporter expects strings only. Convert any float or int to string.
        value = str(value)
        return super(MTQInfraXmlItemExporter, self).serialize_field(field, name, value)

class MTQInfraJsonItemExporter(JsonItemExporter):

    def __init__(self, file, **kwargs):
        # Base JSON exporter does not use dont_fail=True and I want to pass JSONEncoder args.
        self._configure(kwargs, dont_fail=True)
        self.file = file
        self.encoder = json.JSONEncoder(**kwargs)
        self.first_item = True

class MTQInfraKmlItemExporter(BaseItemExporter):

    def __init__(self, filename, **kwargs):
        self._configure(kwargs, dont_fail=True)
        self.filename = filename
        self.kml = simplekml.Kml()
        self.icon_styles = {}

    def _escape(self, str_value):
        # For now, we only deal with ampersand, the rest is properly escaped.
        return str_value.replace('&', '&amp;')

    def start_exporting(self):
        pass

    def export_item(self, item):
        # ACTUAL CODE REMOVED FOR BLOG. PLEASE CHECK GITHUB REPO FOR SOURCE.
        pass  # placeholder so this excerpt is valid Python

    def finish_exporting(self):
        # NOTE: The KML file is over 40Mb in size. The XML serializing will take a while and will
        # probably get your laptop fan to start 🙂
        self.kml.save(self.filename)
```

The MTQInfraXmlItemExporter and MTQInfraJsonItemExporter are simply customized versions of their equivalent base Scrapy exporters. The MTQInfraKmlItemExporter is a custom exporter that saves output in KML format using the simplekml module. Almost all the work is done in export_item(), which is the method called for each MTQInfraItem created by our parsers. As their names imply, start_exporting() and finish_exporting() are called at the start and at the end, and can be used to set up your exporter and to finalize the export process, respectively.
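The reason MTQInfraXmlItemExporter stringifies values is easy to reproduce outside Scrapy: Python 3’s `xml.etree.ElementTree` also refuses to serialize a float as element text (it raises a TypeError), so numbers have to be turned into text first. A minimal sketch with a hypothetical `item_to_xml` helper; this is not Scrapy’s XmlItemExporter.

```python
import xml.etree.ElementTree as ET

def item_to_xml(item, fields, root_tag="structure"):
    """Serialize one item to an XML string, converting non-string values to text first."""
    root = ET.Element(root_tag)
    for name in fields:
        if name not in item:
            continue
        value = item[name]
        child = ET.SubElement(root, name)
        # ElementTree only serializes str text, so stringify ints/floats
        child.text = value if isinstance(value, str) else str(value)
    return ET.tostring(root, encoding="unicode")

print(item_to_xml({"record_no": "00002", "latitude": 45.5}, ["latitude", "record_no"]))
# <structure><latitude>45.5</latitude><record_no>00002</record_no></structure>
```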

Our pipelines.py file contains the following:

```python
#!/usr/bin/env python
# encoding=utf-8

from scrapy.xlib.pydispatch import dispatcher
from scrapy import signals
from scrapy.exceptions import DropItem
from scrapy.contrib.exporter import CsvItemExporter
from scrapy.contrib.exporter import JsonLinesItemExporter

# Custom exporters
from exporters import MTQInfraJsonItemExporter
from exporters import MTQInfraXmlItemExporter
from exporters import MTQInfraKmlItemExporter

import csv

class MTQInfraPipeline(object):

    def __init__(self):
        self.fields_to_export = [
            'latitude',
            'longitude',
            'record_no',
            # MORE FIELDS IN THE REAL FILE. REMOVED FOR BLOG.
            'record_href',
            'location_href',
            'structure_type_img_href'
        ]
        dispatcher.connect(self.spider_opened, signals.spider_opened)
        dispatcher.connect(self.spider_closed, signals.spider_closed)

    def spider_opened(self, spider):
        self.csv_exporter = CsvItemExporter(open(spider.name+".csv", "w"),
            fields_to_export=self.fields_to_export, quoting=csv.QUOTE_ALL)
        self.json_exporter = MTQInfraJsonItemExporter(open(spider.name+".json", "w"),
            fields_to_export=self.fields_to_export,
            sort_keys=True, indent=4)
        self.jsonlines_exporter = JsonLinesItemExporter(open(spider.name+".linejson", "w"),
            fields_to_export=self.fields_to_export)
        self.xml_exporter = MTQInfraXmlItemExporter(open(spider.name+".xml", "w"),
            fields_to_export=self.fields_to_export,
            root_element="structures", item_element="structure")

        # Make a quick copy of the list
        kml_fields = self.fields_to_export[:]
        kml_fields.append('fusion_marker')
        self.kml_exporter = MTQInfraKmlItemExporter(spider.name+".kml", fields_to_export=kml_fields)

        self.csv_exporter.start_exporting()
        self.json_exporter.start_exporting()
        self.jsonlines_exporter.start_exporting()
        self.xml_exporter.start_exporting()
        self.kml_exporter.start_exporting()

    def process_item(self, item, spider):
        self.csv_exporter.export_item(item)
        self.json_exporter.export_item(item)
        self.jsonlines_exporter.export_item(item)
        self.xml_exporter.export_item(item)

        # Add fusion_marker to KML for use in Google Fusion Table
        if item['ai_code'] == "no_restriction":
            item['fusion_marker'] = "small_green"
        elif item['ai_code'] == "restricted":
            item['fusion_marker'] = "small_yellow"
        elif item['ai_code'] == "closed":
            item['fusion_marker'] = "small_red"
        else:
            item['fusion_marker'] = "small_blue"
        self.kml_exporter.export_item(item)

        return item

    def spider_closed(self, spider):
        self.csv_exporter.finish_exporting()
        self.json_exporter.finish_exporting()
        self.jsonlines_exporter.finish_exporting()
        self.xml_exporter.finish_exporting()
        self.kml_exporter.finish_exporting()
```

Some notes on the code:

The fields_to_export list built in __init__(), and the lines that use it: this is used to export fields in a certain order and to exclude the fusion_marker field from all but the KML output.

The two dispatcher.connect() calls: these connect Scrapy signals to our pipeline. In this case, the spider_opened and spider_closed methods will be called when the spider starts and stops, allowing us to set up our exporters and call their start_exporting/finish_exporting methods.

process_item(): this method, as mentioned above, is called for each item created by our parsers. In turn, it calls the export_item method of each exporter.
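Pipelines can also change or drop items before they reach the exporters. The sketch below (plain Python 3) chains two hypothetical pipelines: one discards items without coordinates, the other sets `fusion_marker` with the same ai_code mapping as process_item() above. Scrapy does the chaining itself, in the order given in ITEM_PIPELINES; `run_pipelines` and the local `DropItem` class only imitate that (the real exception is `scrapy.exceptions.DropItem`).

```python
class DropItem(Exception):
    """Stand-in for scrapy.exceptions.DropItem."""

class RequireCoordinatesPipeline:
    """Hypothetical pipeline: discard items without coordinates."""

    def process_item(self, item, spider):
        if item.get("latitude") is None or item.get("longitude") is None:
            raise DropItem("missing coordinates for record %s" % item.get("record_no"))
        return item

class FusionMarkerPipeline:
    """Hypothetical pipeline: derive the marker colour from ai_code."""
    MARKERS = {"no_restriction": "small_green", "restricted": "small_yellow", "closed": "small_red"}

    def process_item(self, item, spider):
        item["fusion_marker"] = self.MARKERS.get(item.get("ai_code"), "small_blue")
        return item

def run_pipelines(item, pipelines, spider=None):
    """Pass an item through pipelines in order; a DropItem stops the chain (simplified)."""
    for pipeline in pipelines:
        try:
            item = pipeline.process_item(item, spider)
        except DropItem:
            return None
    return item

chain = [RequireCoordinatesPipeline(), FusionMarkerPipeline()]
print(run_pipelines({"record_no": "00002", "latitude": 45.5, "longitude": -73.6,
                     "ai_code": "closed"}, chain))
print(run_pipelines({"record_no": "00003", "ai_code": "closed"}, chain))  # None
```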

In order for Scrapy to use our pipeline, we need to add the following lines to settings.py:

```python
# Our do-it-all pipeline
ITEM_PIPELINES = [
    'mtqinfra.pipelines.MTQInfraPipeline'
]
```

Running It For Real

When you’re ready to run your scraper on thousands of pages, I suggest you add the following to settings.py:

LOG_FILE = 'mtqinfra.log'

Or use the --logfile option when running “scrapy crawl”. This will save the Scrapy output to the specified log file. If you still want to see things flowing on your terminal, do a “tail -f” on the log in another terminal; this way you get the best of both worlds.

As mentioned in “Testing It”, be polite. Make sure your code generates the proper output with a limited number of pages first. You don’t want to run your scraper for hours (this project does not take hours to crawl, but you get the idea) and then find out you forgot to include a field and need to reprocess each page.

Also, try to scrape the website during the night, when your traffic probably has less impact.

Finally, if you do run it and then realize your output has errors or needs to be changed, consider “reprocessing” your own results instead of scraping the website again (if possible). For this reason, I strongly suggest you always save your data in LineJSON format as it is super easy to reprocess. See next section for an example.

Reprocessing Results if Needed

If, after scraping thousands of pages, you realize you had a typo in a generated field (e.g. our KML popup), don’t scrape the whole website again. Instead, consider reprocessing your own data. Of course, this can only be done if everything you need is already in your previously scraped data. If a field is missing completely and cannot be generated/computed, you’re out of luck.

Here’s an example of how you could reprocess previously saved LineJSON data:

```python
import json

###
### ADD ANY OTHER NECESSARY MODULE IMPORTS AND/OR MODIFIED EXPORTERS/PIPELINES HERE.
### SEE THE SAMPLE reprocess_json.py FILE IN THE GITHUB PROJECT FOR MORE DETAILS.
###
from mtqinfra.pipelines import MTQInfraPipeline
from mtqinfra.items import MTQInfraItem  # assumed location (Scrapy's default items.py)


### Create a fake spider object with any fields/methods needed by your exporters.
class FakeSpider(object):
    # Set the spider name.
    # NOTE: Make sure you don't use the same name as the original spider, because you'll
    # overwrite the previous data (and, with this implementation, the script will fail too).
    name = "mtqinfra-reprocessed"


### MAIN
spider = FakeSpider()

# This is the previously scraped data (one JSON object per line).
with open("mtqinfra.linejson") as input_file:
    pipeline = MTQInfraPipeline()
    pipeline.spider_opened(spider)
    for line in input_file:
        item = MTQInfraItem(json.loads(line))
        pipeline.process_item(item, spider)
    pipeline.spider_closed(spider)
```
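If you only need to fix one generated field, you may not even need the pipeline: a plain-Python pass over the LineJSON file can be enough. The sketch below is a standalone assumption, not the project's reprocess_json.py. It reads each saved item, regenerates a hypothetical `popup` field from hypothetical `name` and `road` fields, and writes the result to a new file so the original data stays untouched.

```python
import json


def build_popup(item):
    # Hypothetical generated field: rebuild the (previously mistyped) popup text
    # from fields that were already scraped.
    return "<b>%s</b><br/>Route: %s" % (item["name"], item["road"])


def fix_popup(item):
    item["popup"] = build_popup(item)
    return item


def reprocess(input_path, output_path, fix):
    """Apply fix() to every item of a LineJSON file and write a new file.

    Returns the number of items written.
    """
    count = 0
    with open(input_path, encoding="utf-8") as src, \
            open(output_path, "w", encoding="utf-8") as dst:
        for line in src:
            line = line.strip()
            if not line:
                continue  # skip blank lines, e.g. a trailing newline
            item = fix(json.loads(line))
            dst.write(json.dumps(item, ensure_ascii=False) + "\n")
            count += 1
    return count
```

For example, `reprocess("mtqinfra.linejson", "mtqinfra-reprocessed.linejson", fix_popup)` returns the number of items rewritten. Writing to a new file rather than in place means a bug in the fix cannot destroy the only copy of your scraped data.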

The Source Code

You can download the complete source code for the scraper on GitHub.

Final Note

Scrapy is a very powerful scraping framework. It does much more than what I use in this project. Have a look at the documentation to learn more.

I’ll stop here as writing this post actually took more time than coding the project itself. Yes, I’m serious. This either shows you how powerful Python+Scrapy are, or how much I suck at writing blog posts 🙂

I hope someone will find this useful. Feel free to share in the comments section.

Very nice and extensive tutorial Marco. Btw, Scrapy doesn’t support (nor plans to support) Python 3 for the moment.

Hi Pablo! Thank you very much. It means a lot coming from you! I’ve updated my post to include your note about Python 3. Thanks for open-sourcing that great framework!

Something that I find missing on the web is a good set of examples on how to use Scrapy from within existing Python code, rather than running it from my shell as a standalone program. Am I just stupid, or is that not the focus of Scrapy?

I do not think it’s the focus of Scrapy, but depending on what you really need to do, it may still be possible (or unnecessary). The way I see it, Scrapy was made to be extended by custom code that is your “data extraction logic”, not to be an extension of an existing program. Still, the code is open-source, so depending on what kind of “integration” with your program you want, you may be able to twist the framework a bit to use it more “directly”. For a top-down view, check the scripts/scrapy and cmdline.py files in the Scrapy egg. But before doing that, I suggest you have a look at http://doc.scrapy.org/en/0.14/index.html#extending-scrapy. What are you trying to do exactly?

Actually, I had a simple Python webpage serving users some dynamic data, and part of that data would be scraped by Scrapy from somewhere else. I didn’t want Scrapy spawning a shell for every request, so I thought about having the Scrapy code inside my current code (which is neither fancy nor slow to run in the browser) 🙂 Did I miss the point of Scrapy, or am I just not understanding it?

Sorry for the late reply. I assume the data you want to scrape and integrate in your webpage changes often, or is simply too large a dataset to scrape into your own database, right? If so, I suppose you could, as I mentioned above, import the proper Scrapy modules in your own Python process and run the scraper from there. However, the whole thing is surely not optimal if you expect your website to become popular 🙂 You should think about doing this asynchronously too, so that your own page loads quickly and shows placeholder images/messages (e.g. a “Loading…” spinner) for the data you are about to scrape. Maybe an option would be to have your Scrapy spider running continuously and have your Python CGI/AppServer send asynchronous requests to it. You may want to check the Scrapy Web Service http://readthedocs.org/docs/scrapy/en/latest/topics/webservice.html. Depending on the nature of the scraped data, you may also want to cache it if possible. Unfortunately, I don’t think there is a silver bullet for what you want to do. Cheers and good luck with your project!

Hello Marco, Great tutorial. The best I have ever seen!

2 Questions please 😉

1) What is your experience with the DOWNLOAD_DELAY setting? I didn’t see it in your settings file and wondered if you were using it.

2) If you had to run the spider with different submitted values for the form, would you write the loop inside the spider code, or simply use a shell script with a for loop to call the same spider with different parameters (to fill the form), as shown below?

For example:

```bash
#!/bin/sh

#!/bin/bash

#!/usr/bin/python

for j in {1..10}; do
    for i in {0..30}; do
        nohup scrapy crawl --set "Var1=$i" --set "Var2=$j" --set "Var3=Test" --set "Var4=Test2"//spiders/spiderX1_spider.py --set FEED_URI="output_$i$j.csv" --set FEED_FORMAT=csv &
        echo 'Spider Closed'
    done
    echo 'Sleeping for...'
    echo $(($RANDOM%320+180))
    sleep $(($RANDOM%320+180))
done

exit
```

I am using this method with nohup since I am calling it from a cron job on a remote server, but this is not efficient and creates network issues: the loop keeps creating spider after spider, and even when I use the random sleep to process one spider at a time, it’s a mess.

Any help would be greatly appreciated.

Kind Regards,

Jeremie

Sorry for the delay. I just happened to log in to WordPress and noticed a bunch of new comments. Apparently I set up a rule that moved WordPress notifications to a subfolder by mistake. [crowd cheers] So, I’m not sure if my reply will still be useful, but quickly: 1) I never had to use DOWNLOAD_DELAY, but I did have to limit the number of concurrent spiders for that particular project. 2) I am not sure what you need/want to do exactly, but I doubt I would go with this version. FYI, only the first shebang line (#!/bin/sh) in your script is necessary and useful. Hopefully you have worked this all out since your original post.

Scrapy for product name extraction on a retail site

I am looking to scrape one retail site for product names, but I need to crawl recursively from a seed URL. Is there something I can look into?


Well, I’ve never used Python before and have the coding skills of a dyslexic slug, but I’m going to give Scrapy a go… I have a fairly simple scraping task and I can’t see anything else (cheap) that will do it. So thanks for the tutorial!

Hi Marco, if you possibly ever-so-might just be able to help me with exporting to CSV (can’t do it!) and reading in local source HTML files rather than URLs, I’d be most grateful. I can’t promise big fees but I can promise electronics cartoons 🙂

Sorry for the delay. I had a self-inflicted issue with comment notifications. I’m not sure if you have figured this out already or not. Let me know if not and I’ll see what I can do to help. Unfortunately, I have limited time (which is the main reason I have not posted anything new in 6 months), so don’t get your hopes up too much 😉

Hi there Marco, OK, I’ll word my question as best I can and see if you can help. Thanks a lot 🙂

Hi Marco, I’m still flat out like a lizard drinking; I’ll try to touch base soon and see if you have a few minutes to help. I’m kind of stuck getting HTML pages stored on my C drive read in and parsed. Of course, I will organise myself first before asking for your time! Ciao, Ohmart

Thanks for your code here, it’s very useful to me. I am very new to Scrapy and am trying to submit a form (click its submit button) by passing some values.

Hello Ohmart,

Did you figure out your issue?

Thank you very much, Marco, for this blog.

Very useful, detailed, and informative.

I’m a bit late to reply, but thanks for the kind words. I’m glad you found it useful.

Hi, the tutorial looks great.

2 remarks:

(1) It doesn’t work on my computer. Are you sure the webpage has not changed?

(2) It would have been nice to have smaller step-by-step examples, from easy to difficult, showing how to develop powerful crawlers using Scrapy.

Any links for that?

Thanks,

colin

Hi Colin,

Concerning #1: No, I’m not sure 🙂 I did this a while ago and did not plan to support it. I checked quickly and noticed they changed the URL of the database without a redirect. The DB is now here: http://www.mtq.gouv.qc.ca/pls/apex/f?p=TBM:STRCT:730195454607086::NO:RP,56::

Still, I am not sure the code works as-is anymore. If you play with it and make changes to make it work, feel free to send me a pull request on GitHub.

As for #2: I wrote the blog post after I coded the project. I did not initially plan to make a tutorial so I really wrote about the project itself and it turned out to get quite a few hits from people simply wanting to learn Scrapy. I’m not a very good blogger (I find it hard to find time to blog and subjects to write about, but mostly time) so don’t expect anything in the short term here. I don’t know of any particular link for other tutorials. I’d have to Google that, but I’m sure you did that already 🙂

Cheers,

Marco

Hi Marco, I am going to try to get my question and an example up here in the next few months… My main problem was reading HTML pages off my C drive and parsing them. I think it works fine if there’s a URL, but not so much with files on a PC?

Hi Omar,

I just did a quick test, and Scrapy supports file:// URLs. If you have similar but disconnected HTML files, simply develop a scraper for them and feed Scrapy the list of file://path URLs. This should work fine.
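Building that list from a folder of saved pages is short with the standard library: `pathlib.Path.as_uri()` produces a proper `file://` URL (including the drive letter on Windows) for an absolute path. A minimal sketch, assuming the pages are `.html` files in one folder; `html_file_urls` is a hypothetical helper whose result can be used as a spider's `start_urls`.

```python
from pathlib import Path


def html_file_urls(folder, pattern="*.html"):
    """Return sorted file:// URLs for the saved HTML pages in folder."""
    # resolve() makes the paths absolute, which as_uri() requires.
    return [p.as_uri() for p in sorted(Path(folder).resolve().glob(pattern))]


# Hypothetical usage inside a spider:
#     start_urls = html_file_urls(r"C:\saved_pages")
```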